TL;DR

Do you get giant packets of up to 64K bytes from Wireshark or libpcap? It could be due to GSO (Generic Segmentation Offload), a software feature enabled for your Ethernet card.

This is probably going to be really obvious to the packet gurus, but a recent investigation took me by surprise.

I always assumed that packets captured using libraries like libpcap would be no larger than the link-layer MTU plus the link-layer header. The largest packets I have seen on Ethernet links are 1514 bytes (a 1500-byte MTU plus the 14-byte Ethernet header). I have also seen some packet captures containing Gigabit Ethernet jumbo frames of around 9000 bytes. I had read a lot about TCP Segmentation Offload but had never seen a capture of it. To sum it up, a really big packet to me meant 1514 bytes for the most part.

Imagine my surprise when I looked at some packets captured by Trisul and some were about 25,000 bytes. Some were even 62,000 bytes.

Some facts:

- I was on a Gigabit Ethernet port (Intel e1000), but it was running at 100Mbps.

- TCP Segmentation Offload, which could cause such huge packets, was turned off.

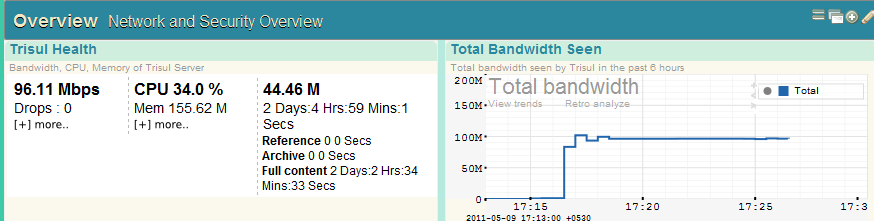

- This only happened at high speeds of about 96 Mbps, and only for TCP.

- This only happened on Ubuntu 10.04+ and not on CentOS 5.3+.

Packet capture file in Wireshark format (tcpdump)

- superpackets.tcpd – Wireshark capture file containing superpackets

ethtool output

    unpl@ubuntu:~$ sudo ethtool eth0
    Settings for eth0:
        Supported ports: [ TP ]
        Supported link modes:   10baseT/Half 10baseT/Full
                                100baseT/Half 100baseT/Full
                                1000baseT/Full
        Supports auto-negotiation: Yes
        Advertised link modes:  10baseT/Half 10baseT/Full
                                100baseT/Half 100baseT/Full
                                1000baseT/Full
        Advertised pause frame use: No
        Advertised auto-negotiation: Yes
        Link partner advertised link modes:  Not reported
        Link partner advertised pause frame use: No
        Link partner advertised auto-negotiation: No
        Speed: 100Mb/s
        Duplex: Full
        Port: Twisted Pair
        PHYAD: 1
        Transceiver: internal
        Auto-negotiation: on
        MDI-X: off
        Supports Wake-on: pumbag
        Wake-on: g
        Current message level: 0x00000001 (1)
        Link detected: yes
    unpl@ubuntu:~$

The test load:

A consistent 96-100 Mbps generated via iperf. All traffic was measured and logged down to the packet level by Trisul. In my tests, superpackets only showed up at really high data rates. If I dropped the load to, say, 30 Mbps, all packets would be at most 1514 bytes, as expected.

Drilling down into the iperf packets:

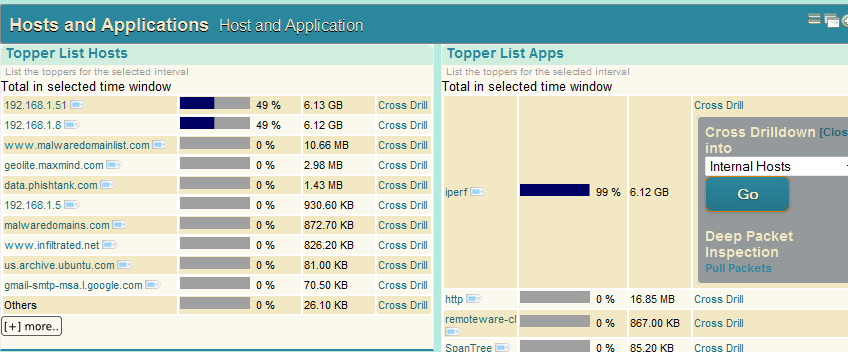

Viewing the top applications contributing to the 96-100Mbps load, we find iperf at the top as expected. I drilled down further into the packets by clicking the “Cross Drill” tool.

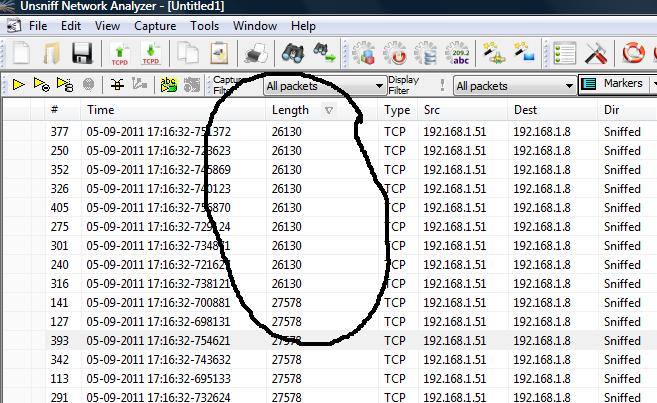

Found really big packets in the list:

Clicking on “Pull Packets” gave me a pcap file I imported into Unsniff. Immediately the packet sizes took me by surprise.

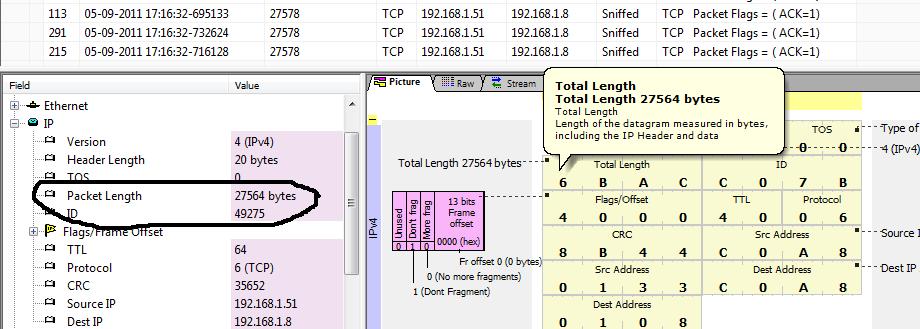

How can we have 27578-byte packets?

It turns out that somehow the IP packets have been merged into one big packet: instead of passing multiple Ethernet packets up the stack, the kernel is reassembling them into one IP packet. The IP Total Length field is adjusted to reflect the reassembled superpacket. Unsniff’s packet breakout view shows this clearly.
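A quick back-of-the-envelope check supports the "merged packets" reading. The sketch below assumes header sizes that are not read from the capture: a 14-byte Ethernet header, a 20-byte IPv4 header, and a 32-byte TCP header (20 bytes plus 12 bytes of options, as is common when TCP timestamps are in use). The function name `split_superpacket` is made up for this illustration. Under those assumptions the 27578-byte packet divides evenly into full-sized segments.

```python
from typing import Tuple

# Assumed header sizes; check them against the actual capture.
ETH_HEADER = 14   # Ethernet II header, no VLAN tag
IP_HEADER = 20    # IPv4 header without options
TCP_HEADER = 32   # 20-byte TCP header + 12 bytes of options (assumption)
MTU = 1500        # standard Ethernet MTU


def split_superpacket(frame_len: int) -> Tuple[int, int]:
    """Return (full_segments, leftover_payload_bytes) for a captured frame."""
    # Payload carried by one full-sized packet on the wire.
    mss = MTU - IP_HEADER - TCP_HEADER
    # Payload carried by the captured (possibly merged) frame.
    payload = frame_len - ETH_HEADER - IP_HEADER - TCP_HEADER
    return divmod(payload, mss)


full, leftover = split_superpacket(27578)
print(full, leftover)  # prints: 19 0
```

A leftover of zero means the superpacket holds exactly 19 segments of 1448 payload bytes each, which is what you would expect if whole wire-sized packets were glued together. If the capture shows different header lengths, the constants need adjusting.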

This caused me some grief because I suspected there were some packet buffers in Trisul that maxed out at 16K. So I had to find what these superpackets were.

Enter Generic Segmentation Offload

After much Googling I found some links that looked promising. Recall that the adapter was in 100 Mbps mode and had TCP Segmentation Offload off. I later noticed that it had Generic Segmentation Offload on. This is a software mechanism introduced in the networking stack of recent Linux kernels.

> The key to minimising the cost in implementing this is to postpone the segmentation as late as possible. In the ideal world, the segmentation would occur inside each NIC driver where they would rip the super-packet apart and either produce SG lists which are directly fed to the hardware, or linearise each segment into pre-allocated memory to be fed to the NIC. This would elminate segmented skb's altogether.
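The offload settings themselves are listed by `ethtool -k <interface>` and can be toggled with `ethtool -K <interface> gso off`. If you want to check them from a script, something like the following sketch could work. The sample text is illustrative and was not taken from my test machine, the exact labels vary between ethtool versions (older ones print spaces instead of hyphens), and `parse_offloads` is a made-up helper name.

```python
import re

# Illustrative text in the style of `ethtool -k eth0`; not real output
# from the machine described in this post.
SAMPLE = """\
Offload parameters for eth0:
rx-checksumming: on
tx-checksumming: on
scatter-gather: on
tcp-segmentation-offload: off
generic-segmentation-offload: on
"""


def parse_offloads(text):
    """Map each 'name: on|off' line to a bool.

    Spaces in names are normalised to hyphens so that both
    'generic segmentation offload' and 'generic-segmentation-offload'
    end up under the same key.
    """
    flags = {}
    for line in text.splitlines():
        match = re.match(r"^\s*([A-Za-z][A-Za-z0-9 \-]*?):\s*(on|off)\b", line)
        if match:
            name = match.group(1).strip().lower().replace(" ", "-")
            flags[name] = match.group(2) == "on"
    return flags


flags = parse_offloads(SAMPLE)
for name in ("tcp-segmentation-offload", "generic-segmentation-offload"):
    print(name, "on" if flags.get(name) else "off")
```

With the sample above this reports TSO off and GSO on, the same combination I had on the Ubuntu box.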

End piece

If you are analyzing packets in Wireshark and run into super-sized packets, they are probably due to the Generic Segmentation Offload feature. Those who, like me, write code for packet capture, relay, and storage should pay extra attention. Do not use arrays of sizes like 16K while buffering packets. You need to allow for the maximum IP Total Length of 64K (65,535 bytes), plus the link-layer header.

PS:

This still doesn't explain why it only happens for TCP.
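To see whether a capture would overflow a fixed-size buffer, a small scan over the file is enough. The sketch below is a minimal reader, assuming the classic pcap file format (not pcapng) with an Ethernet link type. The names `oversized_frames` and `make_test_capture` are hypothetical, and the demo runs on synthetic frames rather than on the real capture.

```python
import struct

ETH_HEADER = 14
MAX_IP_TOTAL_LENGTH = 65535                 # largest value of the 16-bit field
MAX_FRAME = ETH_HEADER + MAX_IP_TOTAL_LENGTH  # safe per-packet buffer size
WIRE_MAX = 1514                             # 1500-byte MTU + Ethernet header


def oversized_frames(pcap_bytes, limit=WIRE_MAX):
    """Yield (index, captured_len, ip_total_len) for frames longer than limit.

    ip_total_len is None when the frame is not IPv4.
    """
    magic = pcap_bytes[:4]
    if magic == b"\xd4\xc3\xb2\xa1":
        endian = "<"
    elif magic == b"\xa1\xb2\xc3\xd4":
        endian = ">"
    else:
        raise ValueError("not a classic pcap file")
    linktype = struct.unpack_from(endian + "I", pcap_bytes, 20)[0]
    if linktype != 1:
        raise ValueError("only Ethernet captures are handled")
    offset, index = 24, 0                   # 24-byte global header
    while offset + 16 <= len(pcap_bytes):
        # Record header: ts_sec, ts_usec, captured length, original length.
        _, _, incl_len, _ = struct.unpack_from(endian + "IIII", pcap_bytes, offset)
        offset += 16
        frame = pcap_bytes[offset:offset + incl_len]
        offset += incl_len
        if incl_len > limit and len(frame) >= ETH_HEADER + 4:
            ethertype = struct.unpack_from("!H", frame, 12)[0]
            ip_total = None
            if ethertype == 0x0800:         # IPv4
                ip_total = struct.unpack_from("!H", frame, ETH_HEADER + 2)[0]
            yield index, incl_len, ip_total
        index += 1


def make_test_capture(frame_lengths):
    """Build a synthetic little-endian pcap for the demo (made-up data)."""
    out = struct.pack("<IHHiIII", 0xA1B2C3D4, 2, 4, 0, 0, MAX_FRAME, 1)
    for length in frame_lengths:
        # Zeroed MAC addresses, IPv4 ethertype, then version/IHL, TOS, Total Length.
        head = b"\x00" * 12 + struct.pack("!HBBH", 0x0800, 0x45, 0, length - ETH_HEADER)
        frame = head + b"\x00" * (length - len(head))
        out += struct.pack("<IIII", 0, 0, length, length) + frame
    return out


capture = make_test_capture([1514, 27578, 62000])
for index, length, ip_total in oversized_frames(capture, limit=16 * 1024):
    print(index, length, ip_total)
```

Against a real file you would pass `open(path, "rb").read()` instead of the synthetic capture. Using `limit=16 * 1024` flags exactly the packets that a 16K buffer would have truncated, while `MAX_FRAME` is the size I would allocate per packet from now on.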

Thanks for sharing this sample with the pcapr community!

http://www.pcapr.net/view/dhinesh/2011/4/2/0/superpackets.tcpd.html