TL;DR

Want traffic, flow, and packet visibility along with IDS alert monitoring? Trisul running on the Security Onion distro is a great way to get it.

Trisul Network Metering & IDS alerts

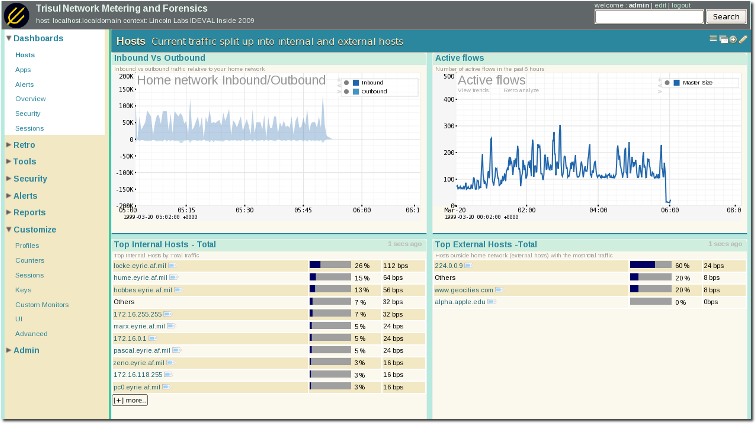

Trisul’s main job is to monitor traffic statistics, correlate them with network flows, and back everything up with raw packets. All of this is presented in a slick web interface that gives you great visibility into your network, with drilldowns and drill-sideways available at every stage. Since its introduction a few weeks ago, we have managed to win over quite a few passionate users.

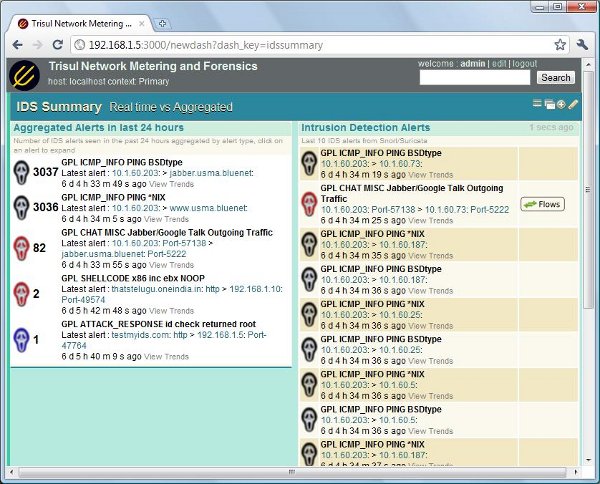

At the moment, most of Trisul’s users use it for traffic and flow monitoring. A major feature of Trisul is that it can accept IDS alerts from Snort and Suricata and merge them with traffic statistics and packets. This requires a bit of setup involving Snort, Barnyard2, and PulledPork, as described in this doc. I found the Security Onion distro recently and was pleasantly surprised by how easy Doug Burks, its author, has made it to get all of this up and running. It is an ideal platform to exercise the alerts portion of Trisul. The only sticking point was that Trisul is 64-bit only, courtesy of its love affair with memory, so we made a 32-bit package specially for Sec-O. This post describes how to set it up and what it can do for you.

Plugging in Trisul

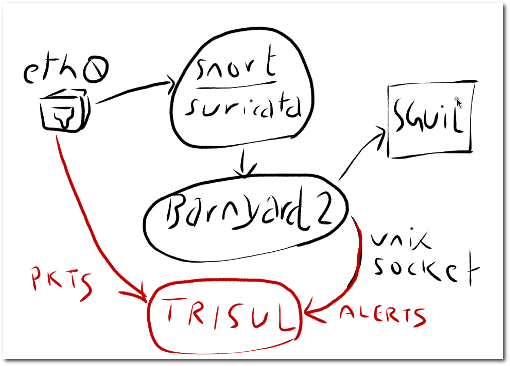

The following sketch illustrates how Trisul plugs into the Sec-O components.

- It accepts raw packets from the network interface directly

- It accepts IDS alerts from barnyard2 via a Unix Socket

You can use SGUIL, the primary NSM application in Security Onion, side by side with Trisul.

Installing Trisul

5 min screencast

Download

You have to get these from the Trisul download page; scroll to the bottom to access the Ubuntu 32-bit builds.

- DEB package (Trisul server)

- The TAR.GZ package (the Web Interface)

Install

Follow the instructions in the Quick Start Guide to install the packages.

Once installed, run the following commands once to set everything up:

cd /usr/local/share/trisul

./cleanenv -f -init

/usr/local/share/webtrisul/build/webtrisuld start

Configure

Change data directory from /usr/local/var to /nsm

Trisul stores its data in /usr/local/var, whereas Sec-O likes to keep data under /nsm. You may wish to move the Trisul data to /nsm/trisul_data.

To do so:

mkdir /nsm/trisul_data

cd /usr/local/share/trisul

./relocdb -t /nsm/trisul_data

Edit trisulConfig.xml file

You want to change two things in the config file: the user that runs the Trisul process and the location of the Unix socket that barnyard2 writes to.

- Change the SetUid parameter in /usr/local/etc/trisul/trisulConfig.xml to sguil.sguil

- Change the SnortUnixSocket to /nsm/sensor_data/xxx/barnyard2_alert
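If you would rather script the two edits above, something like the following sketch might do. It assumes each parameter appears in trisulConfig.xml as a simple one-line element of the form `<SetUid>...</SetUid>`; check that against your own file before relying on it. `set_xml_param` is a hypothetical helper name, not part of Trisul, and the xxx in the socket path is the same placeholder used in the text above.

```shell
#!/bin/sh
# Hypothetical helper: replace the text of a one-line XML element.
# ASSUMPTION: the element is written as <Tag>value</Tag> on a single line.
# The new value must not contain '|' or '&', which are special to sed here.
set_xml_param() {
    file=$1 tag=$2 value=$3
    _tmp=$(mktemp) || return 1
    # '|' is the sed delimiter so that paths containing '/' need no escaping
    sed "s|<$tag>[^<]*</$tag>|<$tag>$value</$tag>|" "$file" > "$_tmp" &&
        cat "$_tmp" > "$file"    # overwrite contents, keeping owner and mode
    status=$?
    rm -f "$_tmp"
    return $status
}

# Example usage (as root):
#   set_xml_param /usr/local/etc/trisul/trisulConfig.xml SetUid sguil.sguil
#   set_xml_param /usr/local/etc/trisul/trisulConfig.xml SnortUnixSocket /nsm/sensor_data/xxx/barnyard2_alert
```

Keep a backup copy of trisulConfig.xml before running anything like this, and open the file afterwards to confirm that both values changed.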

Edit barnyard2.conf to add unix socket output

Edit the barnyard2.conf file under /nsm/sensor_data/xx/barnyard2.conf and add the following line:

output alert_unixsock
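If you set up several sensors, you may want to add that line from a script without ending up with duplicates. Here is a minimal sketch; `ensure_line` is a hypothetical helper name, and xx is the same sensor directory placeholder as above.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a file only if it is not already there.
ensure_line() {
    line=$1 file=$2
    # -x matches the whole line, -F treats the pattern as a fixed string
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example usage (as root):
#   ensure_line 'output alert_unixsock' /nsm/sensor_data/xx/barnyard2.conf
```

Running it a second time leaves the file unchanged, so it is safe to call from a setup script that may be re-run.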

Start Trisul with mode fullblown_u2

You are all set now; just restart everything. The Trisul run mode must be fullblown_u2.

Stopping

/etc/init.d/trisul stop

/etc/init.d/webtrisuld stop

Starting

You have to restart trisul, webtrisul, and barnyard2.

trisul -demon /usr/local/etc/trisul/trisulConfig.xml -mode fullblown_u2

/etc/init.d/webtrisuld start

The web interface for Trisul listens on port 3000. Make sure you have opened that port in iptables.

iptables -I INPUT -p tcp --dport 3000 -j ACCEPT

Using Trisul

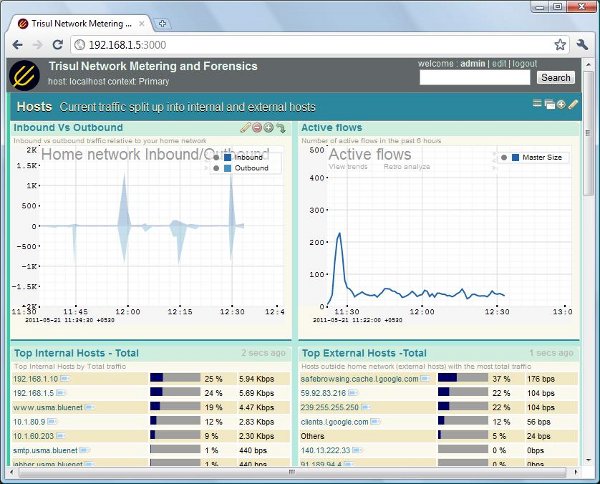

Log in to http://myhost:3000 as admin/admin. Here are a few screenshots of what you can do with Trisul. I encourage you to play with the interface and navigate statistics, flows, and pcaps.

Screencast 2: Beginning Trisul

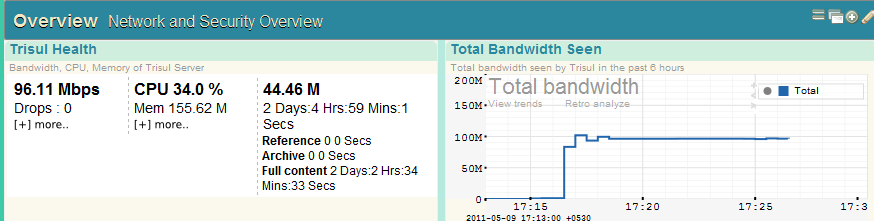

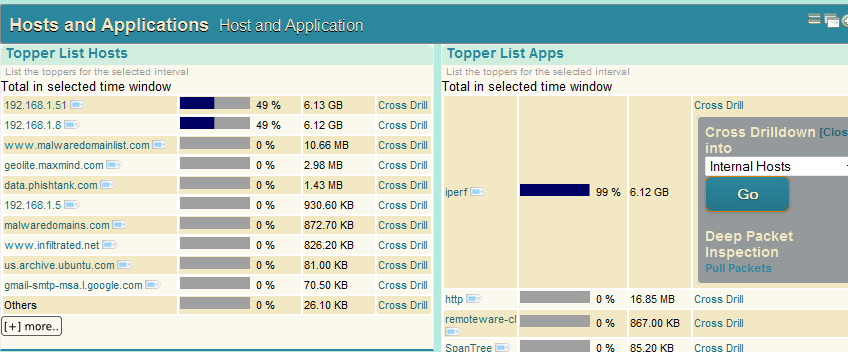

Usage 1 : Traffic metering

Think of it as ntop on a truckload of steroids. Over 100 meters are available out of the box: you can monitor traffic by hosts, MACs, internal vs external, VLANs, and subnets, and even by complex criteria like HTTP hosts, content types, country, ASN, web category, and so on.

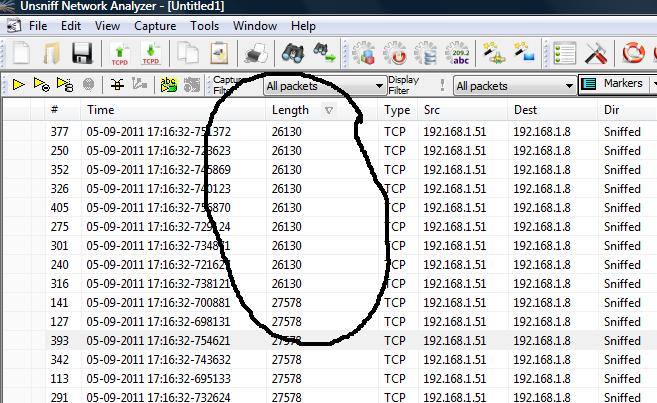

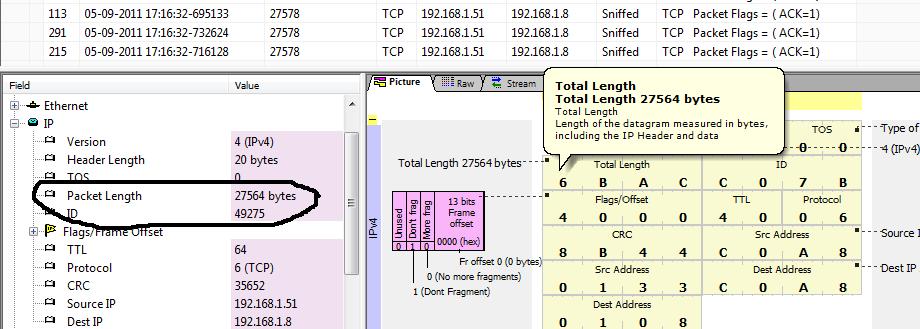

In the screenshot below, you can click on a host to investigate what it is doing, what its historical usage is, and its exact flow activity, or even pull up packets.

Usage 2 : Alert as entry point of analysis

Clicking on Dashboards > Security gets you to this screen. You can click on each alert category to investigate further. You can pull up relevant flows and packets. Watch the screencast below for an example.

Download and try Trisul today

Trisul is free to download and run. There are no limitations other than the fact that only the most recent 3 days of data are available for investigation. If you like it, you can upgrade at any time to remove the restriction. There are no nags and no phoning home of any kind.